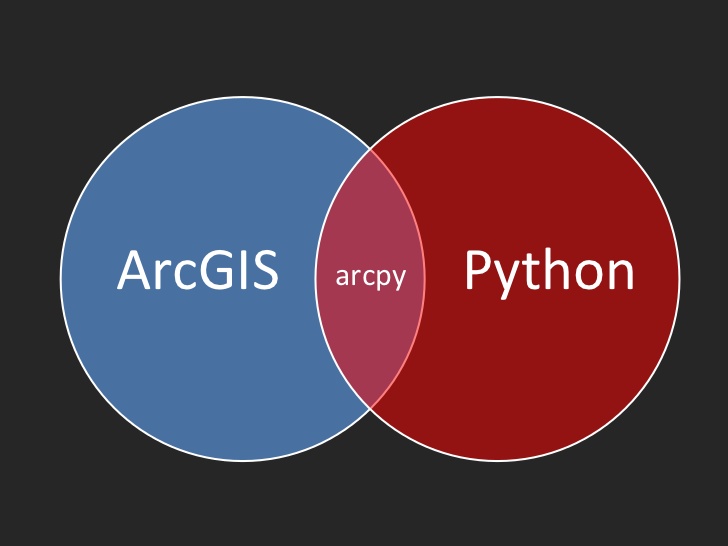

In this article, we’ll examine how you can use Python with Arcpy and Numpy to create a list of unique attribute values from a field. While there are certainly other ways to do this, either in ArcGIS (Desktop or Pro) or through SQL, we’ll focus specifically on the needs of Python programmers working with Arcpy who need to generate a list of unique values for an attribute field.

For most of you, the end goal of your script is not to generate a list of unique attribute values; rather, you need this list as part of a script that accomplishes a larger task. For example, you might want to write a script that creates a series of wildfire maps exported to PDF files, with each map displaying wildfires that were started in a different way. The layer containing wildfire information might have a FIRE_TYPE field whose values reflect how each fire originated (Prescribed, Natural, Unknown). The script first needs to generate a list of the unique values in this field, then loop through them, applying each value in a definition query before exporting the map.
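Inside that loop, each unique value becomes a SQL where clause. The sketch below shows only that string-building step in plain Python; build_where_clause is a hypothetical helper (not an Arcpy function), and the FIRE_TYPE field and its values come from the example above. In ArcGIS, each resulting string would typically be assigned to a layer's definitionQuery property before exporting the map, a step that is left out here.

```python
def build_where_clause(field, value):
    """Hypothetical helper: build a SQL where clause matching one attribute value.

    String values are wrapped in single quotes, with any embedded single
    quotes doubled (standard SQL escaping); numbers are inserted as-is.
    """
    if isinstance(value, str):
        escaped = value.replace("'", "''")
        return "{0} = '{1}'".format(field, escaped)
    return "{0} = {1}".format(field, value)


# Values from the FIRE_TYPE example; a real script would get these from a
# unique-values function instead of hard-coding them.
fire_types = ["Natural", "Prescribed", "Unknown"]

for fire_type in fire_types:
    where = build_where_clause("FIRE_TYPE", fire_type)
    # In ArcGIS, 'where' would be applied as the layer's definition query here,
    # followed by the PDF export for this value.
    print(where)
```

Field delimiters differ between data sources (shapefiles, file geodatabases, enterprise databases), so in a real script arcpy.AddFieldDelimiters can be used to wrap the field name correctly instead of inserting it bare.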

Let’s take a look at a couple of examples of how this can be accomplished in Python with Arcpy. Keep in mind that there are other ways to do this, but both of these methods execute quickly and are easy to code.

Example 1: Arcpy Data Access Module using SearchCursor and Set Comprehension

In both examples, we’re using a shapefile called mtbs_perims_1984-2015_DD_20170501.shp that contains all the wildfire perimeters for the United States from 1984 through 2015. You can get more information on the MTBS wildfire data here. This shapefile includes 25,388 records and a field called FireType that contains an indicator for how the fire started.

Take a look at the script below, and then we’ll discuss how it works.

import arcpy
import timeit

def unique_values(table, field):  # uses set comprehension
    with arcpy.da.SearchCursor(table, [field]) as cursor:
        return sorted({row[0] for row in cursor})

try:
    arcpy.env.workspace = r"C:\MTBS_Wildfire\mtbs_perimeter_data"
    start = timeit.default_timer()
    vals = unique_values("mtbs_perims_1984-2015_DD_20170501.shp", "FireType")
    stop = timeit.default_timer()
    total_time = stop - start
    print(total_time)
    print(vals)
except Exception as e:
    # str(e) is safe even when the exception carries no (or non-string) args
    print("Error: " + str(e))

The first two lines of code simply import the arcpy and timeit modules. The timeit module is going to be used to measure how quickly the code executes.

We then create a function called unique_values(table, field), which accepts a table and a field as its two parameters. The table can be a stand-alone table or a feature class. Inside the function, a SearchCursor object is created using the table (or feature class) and field that were passed in. The final line of code is the key to this function. Pay attention to the snippet below.

{row[0] for row in cursor}

This is called a set comprehension. The for row in cursor part loops through each record in the feature class. The row[0] part extracts the value at position 0 of the current row, which is the FireType field, since it is the only field requested from the cursor. Because the whole expression is surrounded by curly braces, Python builds a set rather than a list, and a set automatically eliminates duplicate values. So that small segment of code loops through all records in your shapefile (or feature class or table), extracts the FireType value for each one, and removes duplicates. Finally, sorted() turns the set into a sorted list, which the function returns.

sorted({row[0] for row in cursor})
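To see this logic run without ArcGIS, the sketch below swaps in a hypothetical FakeSearchCursor for arcpy.da.SearchCursor. It mimics only the two behaviors the function relies on: use in a with statement and iteration over row tuples. It also illustrates one caveat with sorted(): a SearchCursor returns null field values as None, and in Python 3 sorting None together with strings raises a TypeError. The helper names and the nulls-last sort key are our own choices for illustration, not part of Arcpy.

```python
class FakeSearchCursor:
    """Hypothetical stand-in for arcpy.da.SearchCursor (illustration only)."""

    def __init__(self, rows):
        self.rows = rows

    def __enter__(self):
        return self

    def __exit__(self, exc_type, exc_value, traceback):
        return False  # let any exception propagate

    def __iter__(self):
        return iter(self.rows)


def unique_from_cursor(cursor):
    # Same logic as unique_values: set comprehension, then sorted()
    with cursor as rows:
        return sorted({row[0] for row in rows})


def unique_from_cursor_nulls_last(cursor):
    # Variant: sort on (is_null, value) pairs so None is never compared
    # to a string; None sorts after every non-null value.
    with cursor as rows:
        unique = {row[0] for row in rows}
    return sorted(unique, key=lambda v: (v is None, "" if v is None else v))


clean = [("WF",), ("RX",), ("WF",), ("UNK",), ("WFU",)]
with_nulls = clean + [(None,)]

print(unique_from_cursor(FakeSearchCursor(clean)))
# ['RX', 'UNK', 'WF', 'WFU']

try:
    unique_from_cursor(FakeSearchCursor(with_nulls))
except TypeError as err:
    print("sorted() failed on a null value:", err)

print(unique_from_cursor_nulls_last(FakeSearchCursor(with_nulls)))
# ['RX', 'UNK', 'WF', 'WFU', None]
```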

The main block of this script sets the workspace (working folder), records the start time, calls the unique_values function, records the end time, calculates how long the function took to run, and prints the total time followed by the unique values.

When this script is executed the result is as follows:

0.13076986103889876

['RX', 'UNK', 'WF', 'WFU']

The first value is how long the script took to execute the unique_values function, and the second is the sorted list of unique values. So it took just a fraction of a second to perform this operation against approximately 25,000 records.
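A single default_timer() measurement can vary from run to run. A slightly more robust sketch, still using only the timeit module, repeats the call several times and keeps the fastest run. The function timed here, stand_in_unique_values, is a hypothetical pure-Python workload of 25,000 rows used so the example runs anywhere; it is not the Arcpy function.

```python
import timeit


def best_time(func, repeat=5, number=1):
    """Return the fastest per-call time (seconds) across `repeat` trials.

    Each trial calls func `number` times; taking the minimum reduces the
    influence of other activity on the machine.
    """
    trials = timeit.repeat(func, repeat=repeat, number=number)
    return min(trials) / number


def stand_in_unique_values():
    # Hypothetical stand-in workload: 25,000 rows with four distinct values
    rows = [("WF",), ("RX",), ("UNK",), ("WFU",)] * 6250
    return sorted({row[0] for row in rows})


seconds = best_time(stand_in_unique_values)
print("fastest of 5 runs: {0:.6f} s".format(seconds))
```

With the article's function, the call would be best_time(lambda: unique_values("mtbs_perims_1984-2015_DD_20170501.shp", "FireType")) after setting arcpy.env.workspace. Keep in mind that repeated reads of the same file may benefit from disk caching, so the fastest run reflects a warm cache.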

Example 2: Using Numpy with Arcpy

The second example uses the numpy module with Arcpy to deliver the same results using a different method. Numpy is a library for the Python programming language that adds support for large, multi-dimensional arrays and matrices, along with a large collection of high-level mathematical functions that operate on these arrays. Arcpy includes functions that allow you to move data between GIS tables and numpy structures. In this case, the line below converts a GIS table to a numpy array.

data = arcpy.da.TableToNumPyArray(table, [field])

import arcpy
import numpy
import timeit

def unique_values(table, field):  # uses numpy
    data = arcpy.da.TableToNumPyArray(table, [field])
    return numpy.unique(data[field])

try:
    # mtbs_perims_1984-2015_DD_20170501.shp - 25,388 recs
    arcpy.env.workspace = r"C:\MTBS_Wildfire\mtbs_perimeter_data"
    start = timeit.default_timer()
    vals = unique_values("mtbs_perims_1984-2015_DD_20170501.shp", "FireType")
    stop = timeit.default_timer()
    total_time = stop - start
    print(total_time)
    print(vals)
except Exception as e:
    # str(e) is safe even when the exception carries no (or non-string) args
    print("Error: " + str(e))

The code is largely the same as in the first example, except that the unique_values function converts the input table (or feature class) to a numpy array and calls numpy.unique on the input field to derive the unique values for the FireType field. numpy.unique returns these values already sorted, as a numpy array rather than a Python list, so no separate sort is needed.
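As a side note on how this works: TableToNumPyArray returns a numpy structured array in which each requested field is a named column, which is why data[field] pulls out the FireType values. The sketch below builds a small structured array by hand as a stand-in for that output (the "U3" string width and the values are assumptions for illustration). It also shows numpy.unique's return_counts option, which reports how many records carry each value.

```python
import numpy as np

# Hand-built stand-in for TableToNumPyArray output: one named field
data = np.array(
    [("WF",), ("RX",), ("WF",), ("UNK",), ("WFU",), ("WF",)],
    dtype=[("FireType", "U3")],
)

# numpy.unique returns the distinct values already sorted
values = np.unique(data["FireType"])
print(values.tolist())  # ['RX', 'UNK', 'WF', 'WFU']

# return_counts=True also returns how many times each value occurs
values, counts = np.unique(data["FireType"], return_counts=True)
print(dict(zip(values.tolist(), counts.tolist())))
# {'RX': 1, 'UNK': 1, 'WF': 3, 'WFU': 1}
```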

When this script is executed the result is as follows:

0.26176400513661063

['RX', 'UNK', 'WF', 'WFU']

The resulting values are the same as in our first example. Execution was a little slower (about 0.26 versus 0.13 seconds), but still comparable. A common argument for numpy is memory efficiency: numpy arrays store values in compact, fixed-size buffers rather than as individual Python objects. I didn’t test memory use here, though, and note that TableToNumPyArray loads every value of the field into memory at once, whereas the set comprehension keeps only the unique values as the cursor streams through the rows. Memory behavior becomes particularly important if you are dealing with really large datasets containing hundreds of thousands or even millions of records.
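If you want to check memory use on your own data, the standard-library tracemalloc module can report the peak memory allocated while a function runs. The sketch below is pure Python and relies on stand-ins (assumptions, not Arcpy behavior): a generator plays the role of a cursor that streams rows, and a list built up front plays the role of loading a whole field into memory at once. It demonstrates the measuring technique, not the results for either Arcpy method.

```python
import tracemalloc


def peak_bytes(func):
    """Call func and return the peak memory, in bytes, traced during the call."""
    tracemalloc.start()
    try:
        func()
        _, peak = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return peak


def make_rows(n):
    # Stand-in for a cursor: yields one-item row tuples one at a time
    for i in range(n):
        yield ("WF",) if i % 2 else ("RX",)


def streaming_unique(n=200000):
    # Rows are consumed as they arrive; only the set of unique values is kept
    return {row[0] for row in make_rows(n)}


def materialized_unique(n=200000):
    # All rows are loaded into one list first, then deduplicated
    rows = list(make_rows(n))
    return {row[0] for row in rows}


print("streaming peak:   ", peak_bytes(streaming_unique), "bytes")
print("materialized peak:", peak_bytes(materialized_unique), "bytes")
```

To measure the two unique_values functions themselves, pass a lambda that calls each one to peak_bytes. Recent numpy versions report their array allocations to tracemalloc, so the numpy version's buffer should be included in its peak.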

More information: