Is your ArcGIS Pro crawling through large datasets? Does your 3D scene stutter when you navigate? Are you making coffee while waiting for geoprocessing tools to complete? You’re not alone—but you don’t have to accept poor performance as inevitable.

After years of optimizing ArcGIS Pro installations for organizations ranging from small municipalities to federal agencies, I’ve identified the settings and strategies that consistently deliver dramatic performance improvements. This guide will show you how to achieve significant, measurable speed increases in your workflows—with some users seeing improvements of 50% or more in specific operations.

The Three Pillars of Performance

Performance optimization in ArcGIS Pro rests on three foundational pillars: hardware configuration, software settings, and workflow design. While upgrading hardware might seem like the obvious solution, you’d be surprised how much performance you’re leaving on the table with suboptimal settings and inefficient workflows.

Hardware: Building the Right Foundation

GPU: Your Secret Weapon

Your graphics card is arguably the most important component for ArcGIS Pro performance, yet it’s often overlooked. Pro leverages GPU acceleration for both 2D and 3D rendering, and the right configuration can transform your experience.

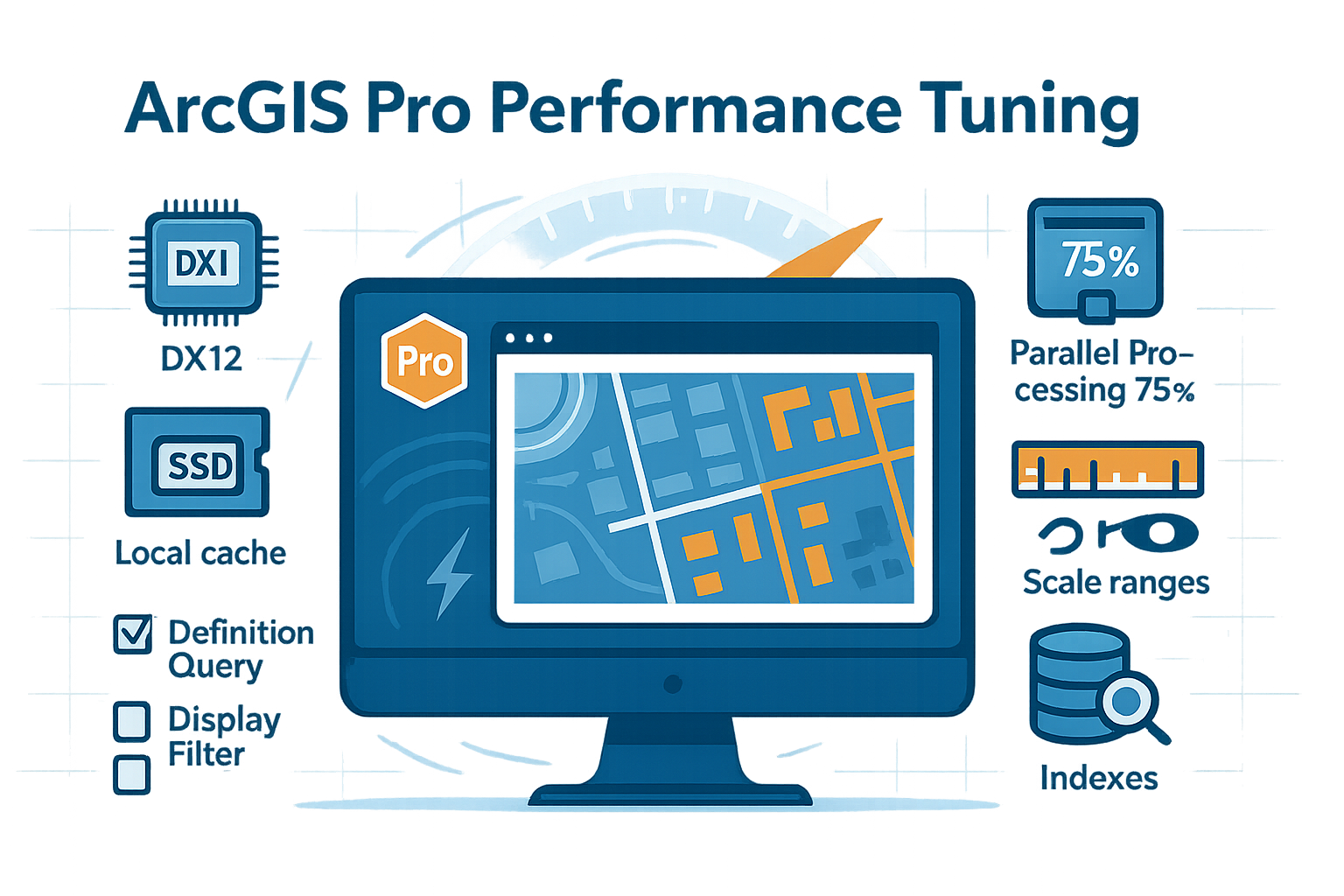

First, make sure ArcGIS Pro is using your dedicated graphics card rather than integrated graphics. On laptops and other hybrid-graphics systems, assign ArcGISPro.exe to the high-performance GPU in Windows graphics settings or in your GPU vendor’s control panel. Then navigate to Project > Options > Display and check your rendering engine. For most modern GPUs, DirectX 12 offers the best performance, but some older cards work better with DirectX 11 or OpenGL. Don’t assume: test each option with your typical workflows.

Real-world impact: Switching from integrated Intel graphics to a dedicated NVIDIA RTX 3060 reduced map refresh time from 12 seconds to 2 seconds for a 1.2 million feature parcel dataset.

RAM: The Goldilocks Zone

While 8GB is the minimum, 16GB should be considered the baseline for professional work. However, throwing 64GB at the problem won’t necessarily help if your workflows don’t need it. Monitor your actual usage using Task Manager during typical workflows. If you’re consistently hitting 80% or more, an upgrade is warranted.
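If you jot down memory readings while you work, a small helper can turn the 80% rule of thumb into a repeatable check. This is a minimal sketch under two assumptions. First, the samples are percentages you collect yourself (for example, from Task Manager’s Performance tab). Second, “consistently” is read as “at least half of the samples,” a cut-off you can tune.

```python
def ram_upgrade_warranted(usage_samples, threshold=80.0, min_share=0.5):
    """Return True if enough memory-usage samples reach the threshold.

    usage_samples: memory usage readings in percent (0-100) taken during
    typical workflows. min_share is an assumed cut-off for "consistently".
    """
    if not usage_samples:
        raise ValueError("Need at least one usage sample")
    high = sum(1 for sample in usage_samples if sample >= threshold)
    return high / len(usage_samples) >= min_share


# Hypothetical readings noted every few minutes during a heavy session
samples = [72, 81, 85, 90, 78, 83]
print(ram_upgrade_warranted(samples))
```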

Also make sure your RAM is running at its rated speed. Many systems ship with memory running below its rated speed by default. Check your BIOS/UEFI settings: enabling the XMP profile (often labeled DOCP or EXPO on AMD boards) can provide a free boost for memory-intensive work, often cited at 10-15%.

Storage Strategy That Works

Here’s a configuration that consistently delivers results:

- OS and ArcGIS Pro installation: Fast NVMe SSD (500GB minimum)

- Active projects and frequently-used data: Same SSD or secondary SSD

- Archive data: Traditional HDD or network storage

- Scratch workspace: Always local SSD, never network

Create a folder structure like this on your fastest drive:

```
C:\GISWork\
├── ActiveProjects\
├── LocalCache\
├── Scratch\
└── TempData\
```
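The same layout can be created with a short script, which is handy when you set up several workstations. This is a minimal sketch using Python’s standard pathlib module. The default root is the C:\GISWork path from the structure above, and you can pass any other root.

```python
from pathlib import Path

# Subfolders from the recommended layout
GIS_SUBFOLDERS = ["ActiveProjects", "LocalCache", "Scratch", "TempData"]


def create_gis_workspace(root=r"C:\GISWork"):
    """Create the GIS working folders under root; existing folders are left as-is."""
    root_path = Path(root)
    created = []
    for name in GIS_SUBFOLDERS:
        folder = root_path / name
        folder.mkdir(parents=True, exist_ok=True)  # no error if it already exists
        created.append(folder)
    return created
```

Calling `create_gis_workspace()` once per machine is enough. Because of `exist_ok=True`, rerunning it is harmless.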

Software Settings: The Hidden Performance Controls

Display Settings That Matter

Navigate to Project > Options > Display and adjust these critical settings:

Hardware Antialiasing: Turn this off unless you’re creating presentation materials. While the exact performance impact varies by GPU and data complexity, disabling it can provide noticeable improvements during analysis work.

Rendering Quality: Keep this at “High” for most work. Only move to “Ultra High” for final map production. The performance hit isn’t worth it for daily use.

Display Cache: This is crucial. Set your cache to a location on your fastest SSD:

- Click “Display Cache” settings

- Set cache location to C:\GISWork\LocalCache

- Set maximum cache size to at least 10GB

- Click “Clear Cache” if you haven’t done this in months
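Before you clear the cache, it can help to see how large it has grown. This is a minimal standard-library sketch that totals the files in a folder. The default path assumes you set your cache to C:\GISWork\LocalCache, and the 10GB target comes from the settings listed above.

```python
import os


def folder_size_bytes(path):
    """Total size of all files under path (0 if the folder does not exist)."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(path):
        for name in filenames:
            try:
                total += os.path.getsize(os.path.join(dirpath, name))
            except OSError:
                pass  # file was removed or is inaccessible; skip it
    return total


def cache_report(path=r"C:\GISWork\LocalCache", limit_gb=10):
    """Describe the cache size relative to a target size in GB."""
    size_gb = folder_size_bytes(path) / 1024**3
    status = "at or above" if size_gb >= limit_gb else "below"
    return f"{path}: {size_gb:.2f} GB ({status} the {limit_gb} GB target)"
```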

Local Cache for Services: Enable this for any web services you use regularly. It can reduce load times from 30 seconds to 2 seconds for frequently-accessed basemaps.

Project-Level Optimizations

Home Folder Location: Always set your home folder to a local drive, not a network location. Even with gigabit ethernet, network latency kills performance.
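One quick way to catch a home folder that points at a network share is to check whether the path is a UNC path (\\server\share\...). This is a minimal sketch using pathlib’s PureWindowsPath, which parses Windows paths on any operating system. It assumes you pass in the home folder path yourself. It also cannot detect a mapped network drive letter, which looks like an ordinary drive to this check.

```python
from pathlib import PureWindowsPath


def is_unc_path(path):
    r"""True if path is a UNC network path such as \\server\share\folder."""
    drive = PureWindowsPath(path).drive  # "C:" for local, "\\server\share" for UNC
    return drive.startswith("\\\\")


for home in [r"C:\GISWork\ActiveProjects", r"\\fileserver\gis\projects"]:
    label = "network (UNC)" if is_unc_path(home) else "drive letter (local or mapped)"
    print(f"{home}: {label}")
```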

Database Connections:

- Use .sde database connection files (these are the standard direct connection method in ArcGIS Pro)

- Ensure database-side statistics are up to date

- Create indexes on frequently-queried fields

- Minimize high-latency network paths when possible

Recovery Settings: If you’re experiencing slowdowns during saves, increase the backup interval to 30 minutes. The default 5-minute interval can cause noticeable hiccups with large projects. The trade-off is that a crash could cost you up to 30 minutes of unsaved work.

Parallel Processing: Use All Your Cores

Most users never touch this setting, leaving significant performance on the table:

- Open Analysis > Environments (the project-level geoprocessing environment settings)

- Set “Parallel Processing Factor” to 75%

This can significantly reduce processing time for tools that honor the parallel processing environment, though benefits vary by tool. Many raster tools and the Pairwise overlay tools (such as Pairwise Buffer and Pairwise Dissolve) can see substantial improvements on multi-core systems. Before relying on it for a tool such as Spatial Join or Buffer, check the Environments section of that tool’s documentation to confirm it supports parallel processing.
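In a Python script or notebook, you can set the Parallel Processing Factor for a block of work with `arcpy.EnvManager`, which restores the previous value when the block exits. This is a minimal sketch. The helper names are hypothetical, and the arcpy import is guarded so the percentage formatting can run outside ArcGIS Pro.

```python
try:
    import arcpy  # available only in ArcGIS Pro's Python environment
except ImportError:
    arcpy = None


def parallel_factor(percent):
    """Format a percentage as a parallel processing factor string, e.g. 75 -> '75%'."""
    if percent < 0:
        raise ValueError("percent must be zero or positive")
    return f"{percent:g}%"


def run_with_parallel_factor(operation, percent=75):
    """Run operation() with the parallel processing factor temporarily set."""
    if arcpy is None:
        raise RuntimeError("arcpy is not available; run this inside ArcGIS Pro")
    with arcpy.EnvManager(parallelProcessingFactor=parallel_factor(percent)):
        return operation()
```

For example, `run_with_parallel_factor(lambda: arcpy.analysis.PairwiseBuffer("roads", "memory/roads_buf", "100 Meters"))` buffers a hypothetical "roads" layer with the factor at 75%. To apply the setting to the whole session instead, assign `arcpy.env.parallelProcessingFactor = "75%"`.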

Workflow Optimization: Work Smarter, Not Harder

Layer Management Strategies

Display Filters vs Definition Queries: Understanding the difference is crucial for performance. Definition queries filter data at the source—reducing what’s retrieved from the database. Display filters affect what’s drawn while keeping all data available for analysis. Each has different performance implications depending on your data source and workflow. Test both approaches with your specific datasets to determine which performs better.

Scale-Dependent Rendering: Set appropriate scale ranges for every layer:

- Parcels: Hide when zoomed out beyond 1:50,000

- Building footprints: Hide when zoomed out beyond 1:25,000

- Utility features: Hide when zoomed out beyond 1:10,000

Implementation example:

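```python
import arcpy


def set_scale_dependency(layer_name, min_scale, max_scale=0):
    """Set the visible scale range for a layer in the active map.

    min_scale: hide the layer when zoomed out beyond this scale (e.g. 50000)
    max_scale: hide the layer when zoomed in beyond this scale (0 = no limit)
    """
    # "CURRENT" only works when the script runs inside ArcGIS Pro
    aprx = arcpy.mp.ArcGISProject("CURRENT")
    m = aprx.activeMap
    if m is None:
        raise RuntimeError("No active map: open a map view and try again.")

    for lyr in m.listLayers():
        if lyr.name == layer_name:
            lyr.minThreshold = min_scale
            lyr.maxThreshold = max_scale
            print(f"Scale set for {layer_name}: {min_scale} - {max_scale}")
            break
    else:
        print(f"Layer '{layer_name}' not found in the active map")
```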
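It helps to be precise about what the two thresholds mean. In arcpy.mp, `minThreshold` is the zoomed-out limit and `maxThreshold` is the zoomed-in limit, and 0 means no limit. Below is a minimal pure-Python sketch of that visibility rule, for illustration only; it is not part of arcpy. Treating the boundary scales themselves as visible is an assumption.

```python
def visible_at(map_scale, min_threshold=0, max_threshold=0):
    """Return True if a layer with these thresholds draws at map_scale.

    Scales are denominators: 50000 means 1:50,000.
    min_threshold: hidden when zoomed out beyond it (larger denominators); 0 = no limit.
    max_threshold: hidden when zoomed in beyond it (smaller denominators); 0 = no limit.
    """
    if min_threshold and map_scale > min_threshold:
        return False
    if max_threshold and map_scale < max_threshold:
        return False
    return True


# Parcels with a 1:50,000 zoomed-out limit
for scale in (10000, 50000, 100000):
    print(f"1:{scale:,} -> parcels visible: {visible_at(scale, 50000)}")
```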

Data Preparation Best Practices

Spatial Indexing: This is free performance. Always rebuild spatial indexes after major edits:

```python
import arcpy

arcpy.management.AddSpatialIndex(
    in_features="YourFeatureClass",
    spatial_grid_1=0,  # 0 lets ArcGIS calculate optimal size
)
```

Attribute Indexing: Index any field you regularly query, join, or relate:

```python
import arcpy

arcpy.management.AddIndex(
    in_table="YourTable",
    fields="YourField",
    index_name="IDX_YourField",
)
```
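To see why an attribute index matters, you can watch a query planner switch from a full table scan to an index lookup. The sketch below uses Python’s built-in sqlite3 module purely as a stand-in. It is not a geodatabase, and the table and field names are hypothetical, but it illustrates the general principle behind indexing a field you query often.

```python
import sqlite3


def query_plan(conn, sql, params=()):
    """Return SQLite's EXPLAIN QUERY PLAN detail text for a query."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[3] for row in rows)  # column 3 holds the detail text


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE parcels (parcel_id INTEGER, zoning TEXT)")
conn.executemany(
    "INSERT INTO parcels VALUES (?, ?)",
    [(i, f"R{i % 5}") for i in range(10_000)],
)

sql = "SELECT parcel_id FROM parcels WHERE zoning = ?"
before = query_plan(conn, sql, ("R1",))

# Similar in spirit to AddIndex(in_table, fields, index_name)
conn.execute("CREATE INDEX IDX_zoning ON parcels (zoning)")
after = query_plan(conn, sql, ("R1",))

print("Without index:", before)  # full table SCAN
print("With index:   ", after)   # SEARCH ... USING INDEX IDX_zoning
```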

Data Generalization: Create simplified versions for small-scale viewing:

- Use the Simplify Polygon tool with a tolerance appropriate to your scale

- Create a “_generalized” version of complex features

- Use scale-dependent layer switching
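To build intuition for what a simplification tolerance does, here is a plain Douglas-Peucker sketch on a list of vertices. Simplify Polygon’s default option, “Retain critical points (Douglas-Peucker),” is based on this classic algorithm. This sketch is not Esri’s implementation, and the coordinates are made up for illustration.

```python
import math


def _perpendicular_distance(pt, start, end):
    """Distance from pt to the line through start and end."""
    (x, y), (x1, y1), (x2, y2) = pt, start, end
    dx, dy = x2 - x1, y2 - y1
    if dx == 0 and dy == 0:  # degenerate segment (e.g. a closed ring's endpoints)
        return math.hypot(x - x1, y - y1)
    return abs(dy * x - dx * y + x2 * y1 - y2 * x1) / math.hypot(dx, dy)


def douglas_peucker(points, tolerance):
    """Keep only vertices that deviate more than tolerance from the simplified line."""
    if len(points) < 3:
        return list(points)
    start, end = points[0], points[-1]
    max_dist, index = 0.0, 0
    for i in range(1, len(points) - 1):
        d = _perpendicular_distance(points[i], start, end)
        if d > max_dist:
            max_dist, index = d, i
    if max_dist <= tolerance:
        return [start, end]  # every interior vertex is within tolerance
    left = douglas_peucker(points[: index + 1], tolerance)
    right = douglas_peucker(points[index:], tolerance)
    return left[:-1] + right  # drop the duplicated split vertex


# A nearly flat edge collapses; a real spike survives
print(douglas_peucker([(0, 0), (1, 0.05), (2, 0), (3, 0.05), (4, 0)], 0.1))
print(douglas_peucker([(0, 0), (1, 0), (2, 5), (3, 0), (4, 0)], 1.0))
```

A larger tolerance removes more vertices. That is why the tolerance should match the coarsest scale at which the generalized layer will be drawn.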

Basemap Optimization

Stop letting basemaps slow you down:

- Use Vector Tile Basemaps: Generally more efficient than raster tile basemaps

- Enable Local Caching: For web services, enable feature and layer caching for frequently-used areas

- Build Pyramids for Local Rasters: For local raster datasets (not web services), always build pyramids

- Consider “Light Gray Canvas”: It’s one of the fastest-rendering Esri basemaps
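Pyramids are successively downsampled copies of a raster, each level coarser than the last by a factor of 2, so Pro can draw a small-scale view without reading every cell. The arithmetic below sketches that idea. The 256-pixel stopping size is an assumption for illustration, not Esri’s exact rule; the tool itself is Build Pyramids (`arcpy.management.BuildPyramids`). The sketch also shows why the storage overhead is modest: the extra levels approach a third of the base pixel count (1/4 + 1/16 + ... = 1/3).

```python
import math


def pyramid_levels(cols, rows, min_size=256):
    """List (cols, rows) for each 2x-downsampled level until both fit in min_size."""
    if min_size < 1:
        raise ValueError("min_size must be at least 1")
    levels = []
    while cols > min_size or rows > min_size:
        cols, rows = math.ceil(cols / 2), math.ceil(rows / 2)
        levels.append((cols, rows))
    return levels


def pyramid_overhead(cols, rows, min_size=256):
    """Extra pixels stored by the pyramid levels, as a fraction of the base raster."""
    extra = sum(c * r for c, r in pyramid_levels(cols, rows, min_size))
    return extra / (cols * rows)


# A hypothetical 20,000 x 15,000 cell orthophoto
levels = pyramid_levels(20000, 15000)
print(f"{len(levels)} levels, smallest {levels[-1]}")
print(f"Overhead: {pyramid_overhead(20000, 15000):.1%}")
```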

Real-World Performance Wins

Case Study 1: Municipal Parcel Management

Challenge: City GIS with 500,000 parcels, 20-second draw times, analysis tools timing out

Optimizations Applied:

- Added spatial indexes to all feature classes

- Implemented scale-dependent rendering (parcels hidden when zoomed out beyond 1:40,000)

- Switched from definition queries to display filters for visualization

- Moved project and data to local SSD

- Enabled hardware acceleration

- Set parallel processing to 75%

Results:

- Draw time: 20 seconds → 3 seconds

- Spatial Join (parcels to zoning): 5 minutes → 45 seconds

- Select by Location: 30 seconds → 4 seconds

- Overall workflow improvement: 65% faster

Case Study 2: 3D City Modeling

Challenge: 3D scene with 50,000 building footprints, choppy navigation at 8-12 fps

Optimizations Applied:

- Switched from OpenGL to DirectX 12

- Created LOD (Level of Detail) representations

- Implemented distance-based visibility

- Optimized texture sizes and mesh complexity

- Updated GPU drivers

- Enabled mesh simplification

Results:

- Navigation: 8-12 fps → 45-60 fps

- Scene load time: 90 seconds → 25 seconds

- Memory usage: Reduced by 40%

The Quick Wins Checklist

Start with these changes that take less than 5 minutes but deliver immediate results:

- [ ] Enable hardware acceleration (Project > Options > Display)

- [ ] Set display cache to local SSD with 10GB+ size

- [ ] Turn off hardware antialiasing for daily work

- [ ] Enable parallel processing at 75%

- [ ] Clear your display cache if it’s been months

- [ ] Set scale ranges for detailed layers

- [ ] Switch to vector tile basemaps

- [ ] Rebuild spatial indexes on your main datasets

- [ ] Increase project backup interval to 30 minutes

- [ ] Use display filters instead of definition queries where appropriate

Performance Monitoring Script

Use this Python script to benchmark your optimizations:

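```python
import statistics
import time

import arcpy


def benchmark_tool_performance(tool_function, iterations=5, parallel_factor=None):
    """
    Benchmark geoprocessing tool performance.
    Run before and after optimizations and compare the results.
    parallel_factor: optional value such as "75%" to use for this benchmark only.
    """
    times = []
    print(f"Running {iterations} performance tests...")

    # Save current settings so they can be restored afterwards
    original_factor = arcpy.env.parallelProcessingFactor
    original_overwrite = arcpy.env.overwriteOutput
    if parallel_factor is not None:
        arcpy.env.parallelProcessingFactor = parallel_factor
    # Every run writes the same output, so allow it to be overwritten
    arcpy.env.overwriteOutput = True

    try:
        for i in range(iterations):
            start = time.perf_counter()  # monotonic, high-resolution timer
            tool_function()  # Your geoprocessing operation
            elapsed = time.perf_counter() - start
            times.append(elapsed)
            print(f"Test {i + 1}: {elapsed:.2f} seconds")
    finally:
        # Restore original settings even if the tool fails
        arcpy.env.parallelProcessingFactor = original_factor
        arcpy.env.overwriteOutput = original_overwrite

    # The first run is often slower (cold caches), so also check the median
    avg_time = statistics.mean(times)
    med_time = statistics.median(times)
    print("\n--- Results ---")
    print(f"Average: {avg_time:.2f} seconds")
    print(f"Median: {med_time:.2f} seconds")
    print(f"Best: {min(times):.2f} seconds")
    print(f"Worst: {max(times):.2f} seconds")
    return avg_time


# Example usage:
def my_spatial_join():
    """Example geoprocessing operation to benchmark"""
    arcpy.analysis.SpatialJoin(
        target_features="parcels",
        join_features="zoning",
        out_feature_class="memory/temp_join",
    )


# Run the benchmark with current settings, then with a 75% parallel factor
baseline = benchmark_tool_performance(my_spatial_join)
optimized = benchmark_tool_performance(my_spatial_join, parallel_factor="75%")
```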
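When you compare baseline and post-optimization timings, report both the percentage of time saved and the speedup factor, because “X% faster” is easy to misread. Here is a small helper; the sample figures are the draw times from Case Study 1 (20 seconds down to 3 seconds).

```python
def summarize_improvement(before_seconds, after_seconds):
    """Return (percent_time_saved, speedup_factor) for a before/after timing pair."""
    if before_seconds <= 0 or after_seconds <= 0:
        raise ValueError("Timings must be positive")
    percent_saved = (before_seconds - after_seconds) / before_seconds * 100
    speedup = before_seconds / after_seconds
    return percent_saved, speedup


saved, speedup = summarize_improvement(20, 3)
print(f"{saved:.0f}% less time, {speedup:.1f}x faster")  # → 85% less time, 6.7x faster
```

By the same arithmetic, cutting a runtime by 50% is a 2x speedup. Passing the two averages returned by the benchmark function gives the same summary for your own data.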

Common Performance Killers to Avoid

Label Expression Complexity: Every label is calculated on every redraw. Simplify expressions or pre-calculate label fields.

Unindexed Joins: Joining tables without indexes on join fields can increase processing time by 10-100x.

Network Data Without Caching: Every pan requires a network round-trip. Always cache frequently-used network data locally.

Too Many Visible Layers: Just because you can load 200 layers doesn’t mean you should. Use group layers and visibility presets.

Complex Symbology at All Scales: Detailed symbology should be scale-dependent. Use simpler symbols at smaller scales.


The Performance Matrix: Effort vs. Impact

Focus your efforts where they’ll have the most impact:

High Impact, Low Effort:

- Enable hardware acceleration

- Set parallel processing

- Clear display cache

- Use local storage

High Impact, High Effort:

- Optimize data schemas

- Implement scale-dependent rendering

- Create generalized datasets

- Build comprehensive indexing

Low Impact, Low Effort:

- Increase backup intervals

- Disable unnecessary extensions

- Close unused panes

Low Impact, High Effort:

- Marginal hardware upgrades

- Complex caching schemes

- Over-optimization of rarely-used data

Your Next Steps

- Benchmark your current performance using the provided script

- Implement the quick wins from the checklist

- Test each optimization with your specific workflows

- Document what works for your organization

- Share your results with your team

Remember, performance optimization isn’t a one-time task—it’s an ongoing process. As your data grows and workflows evolve, regularly revisit these optimizations. The time you invest in performance tuning will pay dividends in productivity every single day.

Start with the quick wins today. Your future self will thank you when that massive spatial join completes in seconds rather than minutes, and your maps refresh instantly instead of leaving you staring at a spinning wheel.

Additional Resources

- ArcGIS Pro Performance Best Practices

- Hardware Requirements and Recommendations

- Optimize Map Performance